The Working Principle and Models Used by Modern Deep Learning Programs for Signal Recognition

Introduction

In recent years, deep learning methods have made significant progress in signal recognition and analysis. These techniques are now applied in areas ranging from medical diagnosis to speech recognition, image processing, and object detection. This article explains how deep learning programs for signal recognition work and surveys the most commonly used models.

What is Deep Learning?

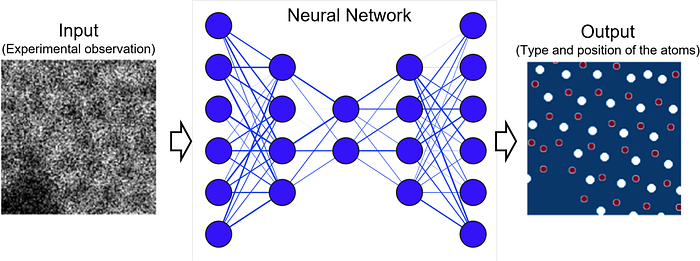

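Deep learning is a subset of machine learning that uses artificial neural networks with many layers to learn from data. Each layer transforms the output of the previous one, so the network can build up increasingly abstract features, for example from raw samples to local shapes to whole patterns. Trained on large datasets, deep learning algorithms can identify complex features and patterns that would be difficult to specify by hand.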
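To make the idea of stacked layers concrete, here is a minimal NumPy sketch of a forward pass through a small multi-layer network. The layer sizes, the ReLU/softmax choices, and the random weights are illustrative assumptions; in a real model the weights would be learned from data by backpropagation.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def softmax(x):
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
# Hypothetical sizes: 16 input features, two hidden layers of 32 units, 3 output classes.
layer_sizes = [16, 32, 32, 3]
# Untrained weights (He-style random init); training would adjust these.
weights = [rng.normal(0, np.sqrt(2 / m), size=(m, n))
           for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

def forward(x):
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(h @ W + b)  # each hidden layer transforms the previous layer's output
    return softmax(h @ weights[-1] + biases[-1])  # class probabilities

batch = rng.normal(size=(4, 16))  # 4 example inputs
probs = forward(batch)
print(probs.shape)  # (4, 3)
```

Each row of `probs` is a probability distribution over the three hypothetical classes.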

Signal Recognition

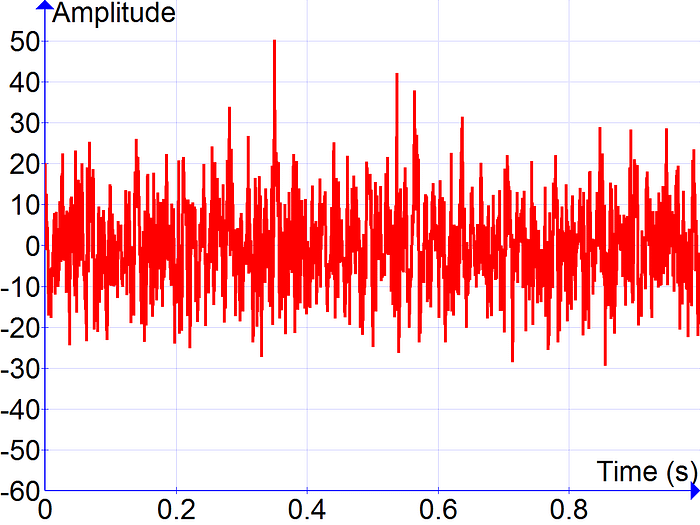

Signal recognition is the process of identifying and classifying specific features or patterns in a signal. These signals come in various forms, such as audio, images, electroencephalograms (EEG), and electrocardiograms (ECG). Deep learning models classify such signals by learning the distinguishing patterns directly from labeled examples.

Working Principle

Deep learning-based signal recognition systems typically follow these steps:

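- Data Collection and Preprocessing: Signal data is collected and prepared for training. This step may involve noise reduction, normalization, and data augmentation (creating modified copies of training examples, for instance by adding noise or shifting them in time).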

- Feature Extraction: Meaningful features are extracted from the raw signal data. In traditional methods, engineers design these features by hand, whereas deep learning models learn them automatically during training.

- Model Training: The deep learning model is trained on labeled data. During this process, the model learns the patterns and relationships in the data.

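- Model Validation and Testing: A validation set is used during development to tune hyperparameters and detect overfitting. After training is complete, the model is evaluated on a separate, held-out test set to estimate how well it performs on unseen data.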

- Prediction and Classification: The trained model is used to recognize and classify new, previously unseen signals.
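The preprocessing and data-splitting steps above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions: the dataset is random placeholder data, and the moving-average filter, per-sample z-score normalization, noise-plus-shift augmentation, and 70/15/15 split are example choices, not the only options.

```python
import numpy as np

rng = np.random.default_rng(42)

def moving_average(x, window=5):
    """Simple noise reduction: smooth each signal with a moving-average filter."""
    kernel = np.ones(window) / window
    return np.array([np.convolve(row, kernel, mode="same") for row in x])

def standardize(x, mean, std):
    """Z-score normalization using statistics computed on the training set only."""
    return (x - mean) / std

def augment(x, noise_std=0.05, max_shift=3):
    """Data augmentation: randomly shift each signal in time and add Gaussian noise."""
    shifts = rng.integers(-max_shift, max_shift + 1, size=len(x))
    shifted = np.array([np.roll(row, s) for row, s in zip(x, shifts)])
    return shifted + noise_std * rng.normal(size=x.shape)

# Hypothetical dataset: 200 signals of length 128 with binary labels.
signals = rng.normal(size=(200, 128))
labels = rng.integers(0, 2, size=200)

# Shuffle, then split 70/15/15 into train/validation/test index sets.
idx = rng.permutation(len(signals))
n_train, n_val = 140, 30
train_idx = idx[:n_train]
val_idx = idx[n_train:n_train + n_val]
test_idx = idx[n_train + n_val:]

X = moving_average(signals)
mean, std = X[train_idx].mean(axis=0), X[train_idx].std(axis=0)
X_train = augment(standardize(X[train_idx], mean, std))  # augment training data only
X_val = standardize(X[val_idx], mean, std)
X_test = standardize(X[test_idx], mean, std)
y_train, y_val, y_test = labels[train_idx], labels[val_idx], labels[test_idx]
```

Computing the normalization statistics on the training split alone keeps information from the validation and test sets from leaking into training.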

Models Used

1. Convolutional Neural Networks (CNN)

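CNNs are widely used in image processing and signal recognition. They slide small learned filters (convolution kernels) across the input, which makes them effective at detecting local features such as edges in an image or short waveform shapes in a one-dimensional signal. Stacking several such layers lets CNNs combine local features into increasingly complex patterns.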
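The core operation of a convolutional layer can be illustrated on a one-dimensional signal. In this sketch the toy signal and the edge-detecting kernel are assumptions chosen by hand; a CNN would learn its kernel weights from data. The sketch applies the filter, a ReLU activation, and max pooling.

```python
import numpy as np

def conv1d(signal, kernel):
    """'Valid' 1D cross-correlation, the operation CNN layers actually compute."""
    k = len(kernel)
    return np.array([np.dot(signal[i:i + k], kernel)
                     for i in range(len(signal) - k + 1)])

def max_pool1d(x, size=2):
    """Keep the strongest response in each non-overlapping window."""
    n = len(x) // size
    return x[:n * size].reshape(n, size).max(axis=1)

# Toy signal: flat, with an upward step between index 5 and index 6.
signal = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1], dtype=float)
# Hand-picked edge-detector kernel (hypothetical; a CNN learns such weights).
edge_kernel = np.array([-1.0, 1.0])

response = np.maximum(0, conv1d(signal, edge_kernel))  # ReLU activation
pooled = max_pool1d(response)

print(int(np.argmax(response)))  # 5, where the step occurs
print(pooled)                    # nonzero only in the window covering index 5
```

Because the same small kernel is applied at every position, the filter detects the step wherever it occurs in the signal.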

2. Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM)

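RNNs are well suited to time series and other sequential signals because they process one step at a time while carrying a hidden state forward. However, traditional RNNs struggle to learn long-term dependencies, largely because gradients tend to vanish as they are propagated back through many time steps. LSTMs were developed to address this: gates (input, forget, and output) control what information is written to, kept in, and read from a memory cell, so LSTMs can learn long-term dependencies and patterns more reliably.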
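A single LSTM time step can be written in a few lines of NumPy. This is a minimal sketch with hypothetical sizes and random, untrained weights; the order in which the four gate blocks are packed into `W`, `U`, and `b` is an arbitrary choice here, and real libraries use their own conventions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (input_dim, 4*hidden), U: (hidden, 4*hidden), b: (4*hidden,)."""
    z = x @ W + h_prev @ U + b
    i, f, o, g = np.split(z, 4)   # input, forget, output gates and candidate values
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
    g = np.tanh(g)
    c = f * c_prev + i * g        # forget part of the old memory, write new information
    h = o * np.tanh(c)            # output a gated view of the memory cell
    return h, c

rng = np.random.default_rng(0)
input_dim, hidden = 1, 8          # hypothetical sizes
W = rng.normal(0, 0.3, size=(input_dim, 4 * hidden))
U = rng.normal(0, 0.3, size=(hidden, 4 * hidden))
b = np.zeros(4 * hidden)

# Run the (untrained) cell over a toy 1D signal, one sample per time step.
signal = np.sin(np.linspace(0, 4 * np.pi, 50))
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in signal:
    h, c = lstm_step(np.array([x_t]), h, c, W, U, b)
print(h.shape)  # (8,)
```

The additive update `c = f * c_prev + i * g` is what lets information persist across many steps: when the forget gate stays close to 1, the cell state is carried forward largely unchanged.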

3. Autoencoders

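Autoencoders are artificial neural networks used for data compression and feature extraction. An encoder compresses the input into a lower-dimensional representation, and a decoder tries to reconstruct the original input from it. This makes autoencoders useful for noise reduction and for anomaly detection: a model trained only on normal signals reconstructs unusual inputs poorly, so a high reconstruction error can flag an anomaly.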
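Reconstruction-based anomaly detection can be sketched with a tiny linear autoencoder trained by plain gradient descent in NumPy. Everything here is an illustrative assumption: the "normal" signals are synthetic sine/cosine mixtures, the anomaly is an injected spike, and practical autoencoders are usually nonlinear and built with a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 32, 2                     # signal length and bottleneck size (illustrative)
t = np.arange(d) / d
# Hypothetical "normal" signals: random mixes of one sine/cosine pair plus small noise.
basis = np.stack([np.sin(2 * np.pi * 3 * t), np.cos(2 * np.pi * 3 * t)]) / np.sqrt(d / 2)

def make_normal(n):
    coeffs = rng.normal(size=(n, 2))
    return coeffs @ basis + 0.05 * rng.normal(size=(n, d))

X_train = make_normal(500)

# Linear autoencoder: encoder W_enc compresses d -> k, decoder W_dec expands k -> d.
W_enc = 0.1 * rng.normal(size=(d, k))
W_dec = 0.1 * rng.normal(size=(k, d))
lr = 0.05
for _ in range(3000):
    Z = X_train @ W_enc                       # codes, shape (n, k)
    X_hat = Z @ W_dec                         # reconstructions, shape (n, d)
    G = 2 * (X_hat - X_train) / len(X_train)  # gradient of the mean squared reconstruction error
    grad_dec = Z.T @ G
    grad_enc = X_train.T @ (G @ W_dec.T)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

def reconstruction_error(X):
    """Per-signal sum of squared differences between input and reconstruction."""
    return np.sum((X - X @ W_enc @ W_dec) ** 2, axis=1)

# Flag anything reconstructed worse than 99% of the training signals.
threshold = np.percentile(reconstruction_error(X_train), 99)

X_normal = make_normal(50)
X_anomalous = make_normal(50)
X_anomalous[:, 10] += 3.0                     # inject a spike never seen in training
normal_flagged = (reconstruction_error(X_normal) > threshold).mean()
anomalous_flagged = (reconstruction_error(X_anomalous) > threshold).mean()
print(normal_flagged, anomalous_flagged)
```

The two-unit bottleneck can only represent the sine/cosine structure of the normal signals, so the spike cannot be reconstructed and its error stands out against the threshold.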

4. Transformer Models

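Transformers have achieved great success in natural language processing and sequential data analysis, and more recently they have been applied to signal processing and time series analysis. Instead of stepping through a sequence one element at a time like an RNN, a Transformer uses attention to relate every position in the sequence to every other position with a few matrix operations. This allows the whole sequence to be processed in parallel during training and helps the model capture long-range dependencies.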
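The attention mechanism at the heart of a Transformer can be sketched as single-head scaled dot-product self-attention in NumPy. The sequence length, dimensions, and random projection matrices are hypothetical, and a full Transformer adds multiple heads, positional information, feed-forward layers, and normalization on top of this.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X of shape (T, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # (T, T): every position vs. every other
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
T, d_model, d_k = 10, 16, 8                  # hypothetical sizes
X = rng.normal(size=(T, d_model))            # e.g. 10 embedded frames of a signal
W_q, W_k, W_v = (rng.normal(0, d_model ** -0.5, size=(d_model, d_k)) for _ in range(3))

out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)  # (10, 8) (10, 10)
```

All positions are handled in the same matrix products, with no loop over time steps, which is where the parallelism described above comes from.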

Application Areas

- Medical Diagnosis: Analysis of biomedical signals such as EEG and ECG for disease diagnosis.

- Speech Recognition: Analysis of audio signals and development of speech recognition systems.

- Image Processing: Analysis of image signals for object recognition and image classification.

- Anomaly Detection: Detection of abnormal signals and behaviors in industrial systems and network security.

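The following example uses TensorFlow/Keras to train a small CNN that classifies images into two classes. It expects the images to be organized with one subdirectory per class under data/train and data/validation.

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Load and preprocess the dataset.
# rescale maps pixel values from [0, 255] to [0, 1];
# flow_from_directory infers class labels from the subdirectory names.
train_datagen = ImageDataGenerator(rescale=1./255)
train_generator = train_datagen.flow_from_directory(
    'data/train',
    target_size=(64, 64),  # resize every image to 64x64 pixels
    batch_size=32,
    class_mode='binary'    # two classes, labels 0 and 1
)

validation_datagen = ImageDataGenerator(rescale=1./255)
validation_generator = validation_datagen.flow_from_directory(
    'data/validation',
    target_size=(64, 64),
    batch_size=32,
    class_mode='binary'
)

# Create the CNN model: two convolution/pooling stages extract local features,
# then fully connected layers map them to a single probability.
model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)),
    MaxPooling2D(pool_size=(2, 2)),
    Conv2D(64, (3, 3), activation='relu'),
    MaxPooling2D(pool_size=(2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(1, activation='sigmoid')  # probability of the positive class
])

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Train the model. steps_per_epoch and validation_steps are omitted so Keras
# uses each generator's length, i.e. one full pass over the data per epoch.
# Fixed values larger than the dataset would make the generator run out of data.
model.fit(
    train_generator,
    epochs=10,
    validation_data=validation_generator
)

# Evaluate the model (a separate, held-out test set gives a less biased estimate)
loss, accuracy = model.evaluate(validation_generator)
print(f'Validation Loss: {loss}')
print(f'Validation Accuracy: {accuracy}')
```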

Conclusion

Deep learning has substantially advanced signal recognition and analysis. Models such as CNNs, RNNs, LSTMs, autoencoders, and Transformers achieve high accuracy on many kinds of signals, from images and audio to biomedical recordings such as EEG and ECG. These technologies are likely to keep developing and to reach new application areas, providing innovative solutions in fields ranging from medical diagnostics to everyday devices.